The liveblog was a revelation for us at the Guardian. The sports desk had been doing them for years, experimenting with different styles, methods and tone. Then, about three years ago, the news desk started using them liberally, to great effect.

I think it was Matt Wells who suggested that perhaps the liveblog was *the* network-native format for news. I think that’s nearly right…though it’s less the ‘format’ of a liveblog than the activity powering the page that demonstrates where news editing in a networked world is going.

It’s about orchestrating the streams of data flowing across the Internet into something compelling, in one form or another. One way to render that data is the liveblog. Another is a map with placemarks. Another is an RSS feed. A stream of tweets. Storify. Etc.

I’m not talking about Big Data for news. There is certainly a very hairy challenge in big data investigations and intelligent data visualizations to give meaning to complex statistics and databases. But this is different.

I’m talking about telling stories by playing DJ to the beat of human observation pumping across the network.

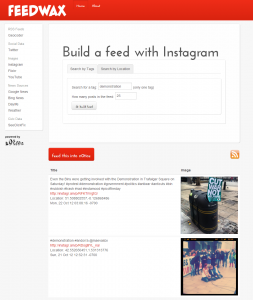

We’re working on one such experiment with a location-tagging tool we call FeedWax. It creates location-aware streams of data for you by looking across various media sources including Twitter, Instagram, YouTube, Google News, Daylife, etc.

The idea with FeedWax is to unify various types of data through shared contexts, beginning with location. These sources may only have a keyword to join them up or perhaps nothing at all, but when you add location they may begin sharing important meaning and relevance. The context of space and time is natural connective tissue, particularly when the words people use to describe something may vary.
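To make the idea concrete, here is a rough sketch of joining items by place and time rather than by keyword. It is not FeedWax’s code: the `Item` record, the `nearby_stream` function and the source labels are all hypothetical names made up for illustration, and the items are assumed to arrive already geotagged.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt


@dataclass(frozen=True)
class Item:
    """One observation from any source (hypothetical common record)."""
    source: str      # a label such as "twitter" or "instagram"; no API is called
    text: str
    lat: float
    lon: float
    when: datetime


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def nearby_stream(items, lat, lon, radius_km, when, window: timedelta):
    """Keep items close to a place and a moment, whatever words they use.

    Returns the matching items from all sources, merged and sorted by time.
    """
    hits = [
        item for item in items
        if abs(item.when - when) <= window
        and haversine_km(lat, lon, item.lat, item.lon) <= radius_km
    ]
    return sorted(hits, key=lambda item: item.when)
```

Note that nothing here looks at the text. A tweet that says “Endeavour” and a photo captioned “giant shuttle on my street” end up in the same stream simply because they were posted from the same neighbourhood within the same hour.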

We’ve been conducting experiments in orchestrated stream-based and map-based storytelling on n0tice for a while now. When you start crafting the inputs with tools like FeedWax you have what feels like a more frictionless mechanism for steering the flood of data that comes across Twitter, Instagram, Flickr, etc. into something interesting.

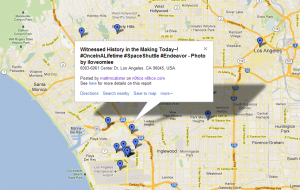

For example, when the space shuttle Endeavour made its last flight and subsequently labored through the streets of LA, there was no shortage of coverage from on-the-ground citizen reporters. I’d bet not one of them considered themselves a citizen reporter. They were just trying to get a photo of this awesome sight and share it, perhaps getting some acknowledgement in the process.

You can see the stream of images and tweets here: http://n0tice.com/search?q=endeavor+OR+endeavour. And you can see them all plotted on a map here: http://goo.gl/maps/osh8T.

Interestingly, the location of the photos gives you a very clear picture of the flight path. This is crowdmapping without requiring that anyone do anything they wouldn’t already do. It’s orchestrating streams that already exist.
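As a rough illustration of why that works, sorting geotagged photos by the time they were taken is already enough to draw a route. The sketch below assumes each photo is a hypothetical `(timestamp, lat, lon)` tuple and returns a GeoJSON `LineString`, which most mapping tools can plot; `path_from_photos` is a made-up name, not part of n0tice or FeedWax.

```python
from datetime import datetime


def path_from_photos(photos):
    """Turn (timestamp, lat, lon) tuples into a GeoJSON LineString.

    The photos can arrive in any order; the route comes from sorting by time.
    """
    ordered = sorted(photos, key=lambda photo: photo[0])
    return {
        "type": "LineString",
        # GeoJSON positions are written longitude first, then latitude.
        "coordinates": [[lon, lat] for _, lat, lon in ordered],
    }
```

Nobody in the crowd had to agree on anything or use a special app. The timestamps and coordinates their phones attached anyway are what connect the dots.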

This behavior isn’t exclusive to on-the-ground reporting. I’ve got a list of similar types of activities in a blog post here, which includes task-based reporting like the search for computer scientist Jim Gray, the use of Ushahidi during the Haiti earthquake, the Guardian’s MPs Expenses project, etc. It’s also interesting to see how people like Jon Udell approach this problem with other data streams out there, such as event and venue calendars.

Sometimes people refer to the art of code and code-as-art. What I see in my mind when I hear people say that is a giant global canvas in the form of a connected network, rivers of different colored paints in the form of data streams, and a range of paint brushes and paint strokes in the form of software and hardware.

The savvy editors in today’s world are learning from and working with these artists, using their tools and techniques to tease out the right mix of streams to tell stories that people care about. There’s no lack of material or tools to work with. Becoming network-native sometimes just means looking at the world through a different lens.